It’s no secret that I’m a big advocate of Tabular Editor (TE). I recently wrote about it on my own website (cheeky little plug for Greyskull Analytics there…).

In my time working with Advancing Analytics, I’ve continued to use it with Databricks lakehouses and have even developed our own solution accelerator with Tabular Editor to get people up and running with Power BI semantic models on the lakehouse fast.

ANTICIPATING XMLA

With the announcement of Fabric in May, one of the very first features I was looking out for was the availability of the XMLA endpoint for Fabric semantic models. Alas, whilst it was there, the endpoint was only read only.

That is until August when support for write operations was announced!

New OPPORTUNITIES

Why the excitement on my part? Well to take advantage of all the great features in Tabular Editor, you really need to be able to connect and write via XMLA, be that for doing CI/CD pipelines or by making edits directly on the dataset.

What great new features does Tabular Editor unlock that you can’t just do in the online Power BI modelling experience in Fabric… tons!

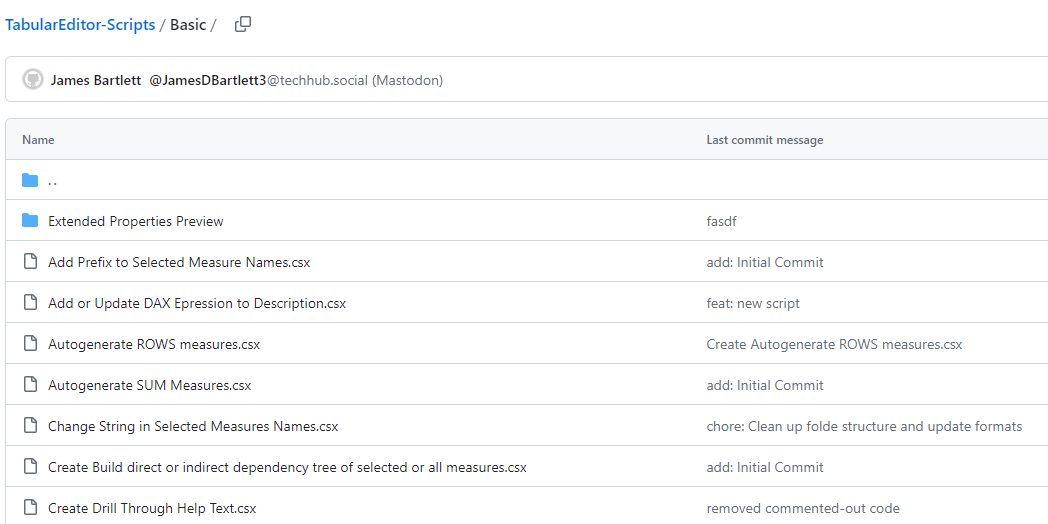

Firstly, you can take advantage of the powerful scripting features. Need to create time intelligence measures at a click of a button? Scripting has your back! This and many other examples of the automation you can achieve with Tabular Editor are available with via the community maintained scripts repo hosted by the folks at powerbi.tips.

How about checking the quality of your model from a best practice perspective? Best Practice Analyzer has you covered.

Add to the mix the ability to source control your models at a more granular level (yes, .pbip format does now allow you to better source control models built in Power BI desktop, but the serialised format in TE is better… don’t @ me!) and using Tabular Editor for me becomes a no brainer, especially if you bake in CI/CD pipelines (*cough* more Greyskull Analytics *cough*).

DIFFERENTIATING EXPERIENCES

Unbelievably, especially for Direct Lake semantic models, there are other no brainer features TE gives you compared to the online experience. For example, you can’t rename columns or table names online. It’s a widely adopted best practice to give both tables and columns a friendly name in a semantic model. You don’t want self service users to see things like dim_date or fact_sales when building reports on your model, as these are data warehousing technical names and concepts that will be unfamiliar and unintuitive to the average business user. Even proper cased column names like “ProductFamilyGroup” are a bit clunky, so changing these display names in Power BI is common. Tabular Editor does give you the capability.

From workflow perspective, you have a few different options available. Firstly, it’s worth noting that you can’t edit the auto-generated, default semantic model that gets created alongside Lakehouses and Warehouses, it has to be a new semantic model. If you’re using Tabular Editor 2 (the free open source version) then your best bet is to build a skeleton model in the online experience, then connect to it using TE to make your edits. You can either save directly back to the connected model (probably not my recommended approach) or download and save a copy of the model offline to be source controlled and deployed via your means of choice.

TE3

If you have Tabular Editor 3 Enterprise edition (and I wholly endorse that you should!), those fabulous Tabular Editor folks have included in their latest release the ability to build Direct Lake semantic models from scratch by connecting to your Fabric lakehouse or warehouse items. They’ve detailed a handy guide here.

CONCLUSION

So, a ton of excitement from me. For Power BI developers who were already embracing pro-code development with 3rd party tools, we can now re-use an awful lot of the productivity elements that have been built for the previous incarnation of Power BI modelling, allowing us to speed up development lifecycles for semantic models as well as being able to build repeatable and robust modelling patterns.

There does come one word of warning, which is to make sure you’re aware of current limitations with Direct Lake semantic models. The full list is available here and it’s worth noting that some of these tripped me up the first time I worked with Direct Lake.

But overall, being able to use one of my favourite tools with my new favourite data platform makes me a happy boy!

We’d love the opportunity to show you how we can use Tabular Editor to enhance your Fabric journey. If you’re getting started looking at Fabric as a viable option for your business, please don’t forget to check out our Fabric proof of concept offering.

Author

Johnny Winter