This is the third in a series where I look at all of the resources common to a Data Lakehouse platform architecture and what you need to think about to get it past your security team.

Building upon Azure Databricks, I'll move from the compute engine to our blob and data lake storage. Things are a little simpler to secure but the plethora of options available can have significant impacts on usability and cost so it's important to understand the impact before baking them into your design.

A Data Lakehouse reference architecture

Blob or Data Lake

The first thing to think about is the type of storage we're talking about. I'll cover both blob storage and Azure Data Lake (Gen2) in this post but it's important to know that there are some feature differences between them which might impact your strategy.

The majority of security-related features you'll want to consider however, are common to both types.

Private Endpoints

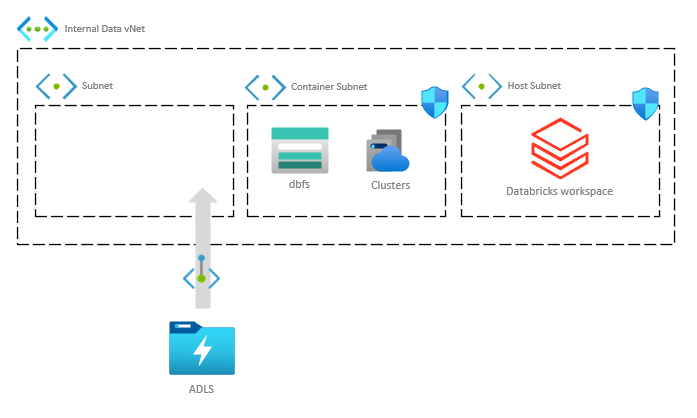

This is the most important configuration for securing our storage accounts and is also a key part of our overall VNet design pattern. A Private Endpoint gives us the ability to restrict connections to our storage account through a single IP address provisioned in a subnet in our VNet. This means we can disable or restrict the Public Endpoint through the firewall configuration options making the storage account only accessible from inside your VNet or connected network (hopefully your machine!)

Data Lake Storage connected to a VNet via a Private Endpoint

A middle-ground solution would be to keep the Public endpoint and use the firewall configuration to restrict access to selected networks, such as our VNet or other networks. Public endpoints aren't explicitly bad especially if the use case requires it, but even with firewall restrictions, the storage account is still visible on the public internet which might be enough to rule this approach out.

Enable blob public access

This setting allows clients to connect to your storage account containers without authentication. It's worth highlighting that with a private endpoint enabled, this setting would not bypass that endpoint. The use of the word "public" is a little misleading in that respect.

This is disabled by default which is the configuration we should stick with unless you have a scenario that would require anonymous access. I wanted to highlight it though as there's usually an assumption this is enabled by default.

Configure anonymous public read access for containers and blobs - Azure Storage | Microsoft Docs

Soft Delete

With soft delete for Azure Data Lake storage (Gen2) now publicly available as of Dec 2021, this feature can be turned on at both the blob and container level in either storage account type. There are a few known issues with the hierarchical namespace but its an ever decreasing list and nothing we should be concerned about here.

Be careful with costs on this one though. Data landed into your blob storage account might never be deleted or overwritten so cost impacts would be negligible. In your data lake however, where files could be overwritten regularly you'll be storing snapshots of that data for the retention period you set. Costs can quickly balloon so be sure to set this low to test it out first.

Customer Managed Keys

This is another feature common to both Data Lake and Blob storage. All of your data is encrypted at rest by default but the standard setting uses "Microsoft-managed keys".

This can conjure mental images of Microsoft employees sneaking peeks at your data when in reality it's all managed automatically with no interaction and no user visibility. Microsoft or not. This approach makes it simple to set up with no admin overhead, looking after, sorting, and rotating keys.

The main reason to consider customer managed keys is if your organisation has specific key specification, or rotation requirements. Otherwise, it's another aspect of the data platform that needs to be managed and is best left off the shopping list.

Ref: Azure Storage encryption for data at rest | Microsoft Docs

Enable infrastructure encryption

Known as double encryption, it sounds more secure, which can't be bad right? With additional encryption of your data, you WILL see a performance impact. It's just not worth it unless it's an established requirement for your organisation and not just something that sounds good. A lot more detail on the specifics can be found here: Enable infrastructure encryption for double encryption of data - Azure Storage | Microsoft Docs. Its worth highlighting that you can't change your mind on this one. It must be done when the storage account is provisioned.

Azure Data Lake Storage

Azure's data lake storage is deployed like a standard storage account in Azure but with the hierarchical namespace option selected which gives us fine-grained security and scalability, ideal for analytical workloads. It also brings a change in the architecture under the hood which is why some features aren't available or may still be in preview. (at least at the time of writing).

These mainly pertain to data protection rather than security and networking though.

-

Point-in-time restore for containers

-

Versioning for blobs

-

Blob change feed

From a security and networking perspective there is one unique feature worth remembering and you don't even need to turn anything on:

Access Control Lists (ACLs)

The ability to secure individual "folders" within your data lake is a significant benefit to the Data Lakehouse. It's a feature not shared with regular blob storage though, which can only be secured at container level.

Generally speaking, this is why you'd use the blob storage account as a repository of raw data. It's cheaper than the data lake storage type and with the lack of granular security, you'll have blanket security across all data allowing administrative access only.

Azure Blob Storage

Enable storage account key access

Use of storage account keys is a fairly standard way of interacting with your data but it's not the most secure as keys can be compromised. Rotating these keys regularly helps but there's still a higher risk than using Azure AD authentication options. It's also important to remember that we also still have our VNet protecting our resources from external intrusion.

It is entirely possible to disable account keys and SAS keys on storage accounts but if you intend on using storage mounts in Databricks with your blob storage account, you need an account or SAS key to connect. If these are disabled as a matter of policy, exceptions can be granted but you will likely need them for connectivity in your platform.

Summary

All of the features I describe above are the likely decision points that need to be addressed and as mentioned there's a real risk to impact platform usability and cost by selecting the wrong combination.

Author

Craig Porteous