Data is among the most valuable assets any organisation has. Without it, the ability to make informed decisions is diminished. It stands to reason, then, that data quality is incredibly important to any organisation. If data doesn’t meet the expectations of accuracy, validity, completeness, and consistency that an organisation sets for it, the consequences for the organisation can be severe. Conversely, if data does meet those expectations, it is a real asset that can be used to drive value across the organisation.

Impact of Data Quality

The cost of poor quality data to the U.S. economy was estimated at $3 trillion in 2016. Six years on, the U.S. economy is much larger, data volumes are vaster, and the data itself has grown more complex. So has the cost of bad data grown too? That is difficult to assess, because the original estimate rested on many assumptions, such as the number of employees engaged in correcting or working around poor quality data, the time they spent doing so, and the cost of poorly informed decisions. For the sake of simplicity, though, we can assume that this cost to a single economy has also grown.

We can reasonably assume this because many organisations do not have data quality at the top of their priority lists. Why might this be the case? Because monitoring data quality and correcting poor quality data is hard. If it were easy, every organisation would have a strategy and a method for tracking and improving data quality.

Often, inertia sets in: faced with an overwhelming amount of information about the risks, it’s difficult to know where to start. So, where do you start?

Determine Data Quality

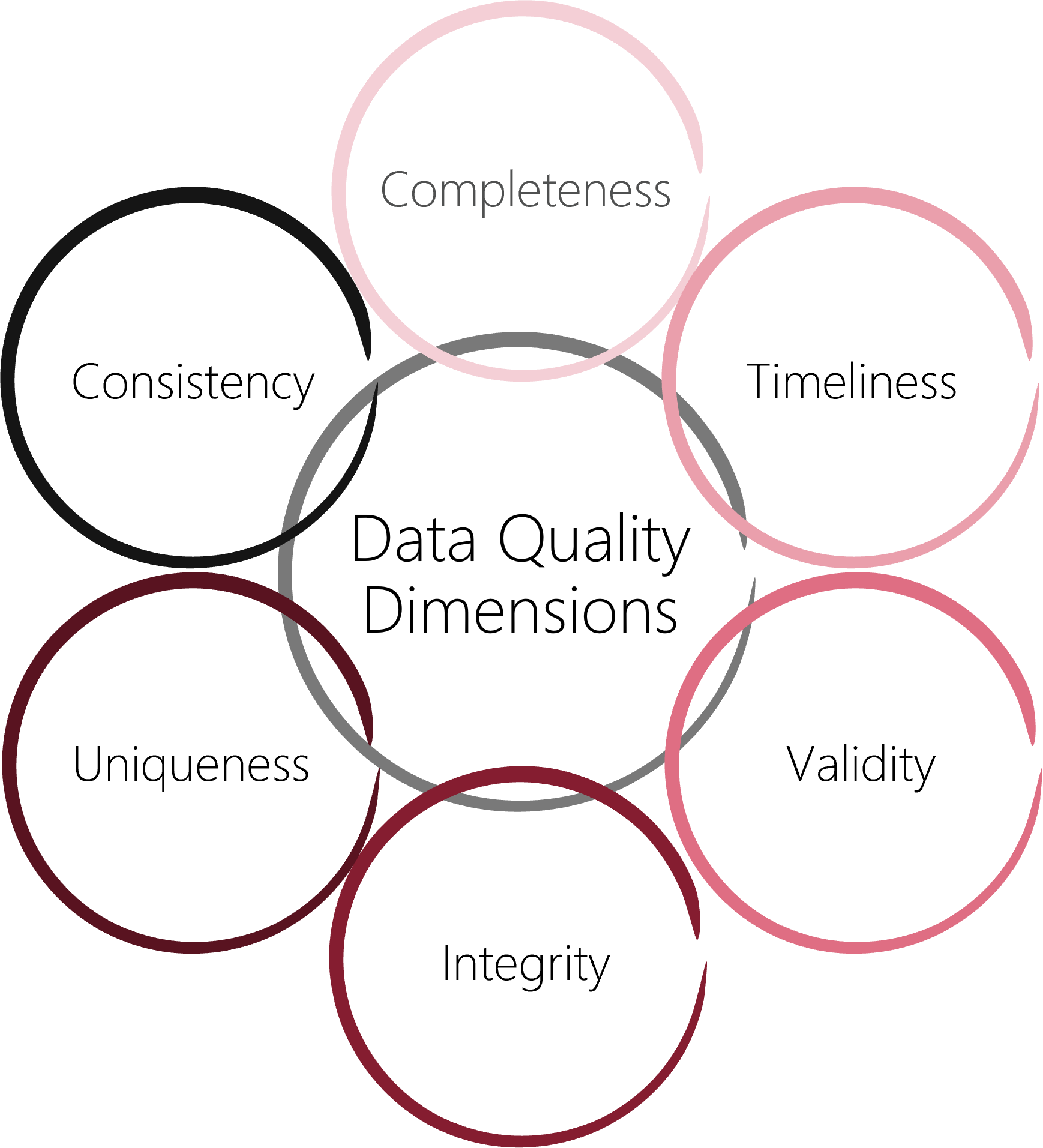

Handily, there are industry-defined dimensions for assessing data quality, set out by the Data Management Association (DAMA).

Completeness

Completeness is a measure of how much data is missing from a dataset, usually expressed as a percentage. For products or services, complete data is crucial in helping potential customers compare, contrast, and choose between different products or services. For example, if one product description does not include an estimated delivery date when all the other product descriptions do, then that product’s data is incomplete.

For an organisation, incomplete data can have a profound impact on activities such as marketing campaigns. For example, if a customer doesn’t fill in their address, there’s no way to reach them with postal marketing.
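To make the measure concrete, here is a minimal sketch that scores one field of a dataset, assuming records are plain Python dictionaries and that `None`, blank strings, and absent keys all count as missing. The function names `is_missing` and `completeness` are hypothetical, and the score is the share of populated values (the complement of the missing percentage).

```python
from typing import Any, Iterable, Mapping


def is_missing(value: Any) -> bool:
    """Treat None and blank strings as missing (an assumed rule; adjust to your own)."""
    return value is None or (isinstance(value, str) and not value.strip())


def completeness(records: Iterable[Mapping[str, Any]], field: str) -> float:
    """Return the percentage of records in which `field` is populated."""
    rows = list(records)
    if not rows:
        raise ValueError("completeness is undefined for an empty dataset")
    # A key that is absent from a record counts as missing, just like None or "".
    populated = sum(1 for row in rows if not is_missing(row.get(field)))
    return 100.0 * populated / len(rows)


customers = [
    {"name": "Ada", "address": "1 High Street"},
    {"name": "Bob", "address": ""},
    {"name": "Cy", "address": None},
    {"name": "Di"},  # no address key at all
]
print(completeness(customers, "address"))  # 25.0
print(completeness(customers, "name"))     # 100.0
```

Scoring per field, rather than per dataset, makes it easier to see which attribute (here, the address) is holding back a campaign.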

Timeliness

Timeliness measures how up to date or out of date the data is at any given moment. For example, if your customer information dates from 2008 and it is now 2022, there is an issue with the timeliness of the data, and likely with its completeness too, since anything that has changed since 2008 is missing.

When determining data quality, timeliness can have a tremendous effect, either positive or negative, on the overall accuracy, viability, and reliability of the data. The more up to date the data is, the more relevant the decisions and insights drawn from it become.
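One simple way to quantify timeliness, sketched below, is to compare each record’s last-updated date with a reference date and count how many exceed an age threshold. The one-year threshold, the `last_updated` dates, and the function names are assumptions for illustration; the right cut-off depends on how quickly the underlying facts change.

```python
from datetime import date
from typing import Iterable


def age_in_days(last_updated: date, as_of: date) -> int:
    """Number of days between a record's last update and the reference date."""
    return (as_of - last_updated).days


def stale_percentage(last_updated: Iterable[date], as_of: date,
                     max_age_days: int = 365) -> float:
    """Percentage of records not updated within `max_age_days` of `as_of`."""
    dates = list(last_updated)
    if not dates:
        raise ValueError("timeliness is undefined for an empty dataset")
    stale = sum(1 for d in dates if age_in_days(d, as_of) > max_age_days)
    return 100.0 * stale / len(dates)


updates = [date(2008, 5, 1), date(2021, 11, 30), date(2022, 3, 1)]
print(age_in_days(date(2022, 3, 1), date(2022, 6, 1)))              # 92
print(round(stale_percentage(updates, as_of=date(2022, 6, 1)), 1))  # 33.3
```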

Validity

Validity refers to whether information follows specific company formats, rules, or processes. For example, many systems ask for a customer’s birthdate. If the customer does not enter it in the expected format (think of the US MM/DD/YYYY format versus the ISO 8601 YYYY-MM-DD format), the quality of the data is compromised.
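A validity rule is easiest to enforce when it is written down as a check. The sketch below assumes the company rule is that birthdates must be ISO 8601 calendar dates (YYYY-MM-DD); `is_valid_birthdate` is a hypothetical name. It checks both the shape of the string and that the date actually exists.

```python
import re
from datetime import datetime

# Assumed company rule: birthdates must be ISO 8601 calendar dates (YYYY-MM-DD).
ISO_DATE = re.compile(r"[0-9]{4}-[0-9]{2}-[0-9]{2}")


def is_valid_birthdate(value: str) -> bool:
    """True if `value` has the exact YYYY-MM-DD shape and is a real calendar date."""
    # strptime alone would also accept "1990-7-4", so check the shape first.
    if not ISO_DATE.fullmatch(value):
        return False
    try:
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:  # e.g. "1990-02-30" has the right shape but is not a date
        return False
    return True


for entry in ["1990-07-04", "07/04/1990", "1990-7-4", "1990-02-30"]:
    print(entry, is_valid_birthdate(entry))
```

Note that a US-style entry such as "07/04/1990" is rejected outright rather than guessed at, since it cannot be told apart from a day-first date.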

Integrity

Integrity refers to the extent to which data is reliable and trustworthy. For example, if your database has an email address assigned to a specific customer, and it turns out that the customer supplied a fake email address, then there’s an issue with the integrity of the data.

Uniqueness

Uniqueness measures whether each real-world entity, such as a customer, is recorded only once. It is most often associated with customer profiles, such as a Single Customer View.

Compiling accurate, unique customer information, including each customer’s analytics for individual products and marketing campaigns, is often the cornerstone of long-term profitability and success.
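A first step towards a unique customer view is spotting records that refer to the same person. The sketch below assumes email address is the matching key and normalises it by trimming whitespace and lowercasing; real Single Customer View matching usually combines several fields and fuzzier rules. All function names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, List, Mapping, Sequence


def group_by_key(records: Sequence[Mapping[str, Any]],
                 key_field: str = "email") -> Dict[str, List[int]]:
    """Map each normalised key to the indexes of the records that share it."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for index, record in enumerate(records):
        value = record.get(key_field)
        if value:  # records with no key are a completeness issue, not a uniqueness one
            # Assumed normalisation: trim whitespace and ignore case.
            groups[str(value).strip().lower()].append(index)
    return dict(groups)


def find_duplicates(records: Sequence[Mapping[str, Any]],
                    key_field: str = "email") -> Dict[str, List[int]]:
    """Keys that appear on more than one record."""
    return {k: idx for k, idx in group_by_key(records, key_field).items() if len(idx) > 1}


def uniqueness(records: Sequence[Mapping[str, Any]], key_field: str = "email") -> float:
    """Distinct keys as a percentage of the records that have a key."""
    groups = group_by_key(records, key_field)
    keyed = sum(len(indexes) for indexes in groups.values())
    if keyed == 0:
        raise ValueError("uniqueness is undefined when no record has a key")
    return 100.0 * len(groups) / keyed


people = [
    {"email": "ada@example.com"},
    {"email": " ADA@Example.com "},
    {"email": "bob@example.com"},
    {"email": None},
]
print(find_duplicates(people))          # {'ada@example.com': [0, 1]}
print(round(uniqueness(people), 1))     # 66.7
```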

Consistency

Consistency of data is most often associated with analytics. It ensures that the source collecting the information captures the correct data for the objectives of the department or company, and does so in the same way over time. For example, if a system records a piece of pertinent data in one field and later starts recording it in a different field, the data is no longer consistent.
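The field-switching example can be caught by comparing successive loads of the same source. This is a minimal sketch, assuming each load arrives as a list of dictionaries; it reports fields that stopped being populated and fields that started being populated. The names `populated_fields` and `field_drift` are hypothetical, and this only detects one kind of inconsistency.

```python
from typing import Any, Dict, Iterable, List, Mapping, Set


def populated_fields(records: Iterable[Mapping[str, Any]]) -> Set[str]:
    """Names of fields that hold a non-empty value in at least one record."""
    return {name for record in records for name, value in record.items()
            if value is not None and value != ""}


def field_drift(previous: Iterable[Mapping[str, Any]],
                current: Iterable[Mapping[str, Any]]) -> Dict[str, List[str]]:
    """Report fields that stopped or started being populated between two loads."""
    before = populated_fields(previous)
    after = populated_fields(current)
    return {"stopped": sorted(before - after), "started": sorted(after - before)}


last_month = [{"customer_id": 1, "postcode": "AB1 2CD"}]
this_month = [{"customer_id": 2, "post_code": "EF3 4GH"}]
print(field_drift(last_month, this_month))
# {'stopped': ['postcode'], 'started': ['post_code']}
```

A "stopped" field paired with a similarly named "started" field is a strong hint that the same data has moved to a new field.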

Monitoring Data Quality

Data quality won’t be fixed overnight, and it could take many months, or years, to improve iteratively. To improve the quality of your data, you first need to monitor it.
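At its simplest, monitoring means measuring the same dimension on every load and watching the trend. The sketch below assumes each run produces a percentage score per dimension and that the organisation has agreed a target; the `Measurement` class, the target of 90, and the scores are illustrative values, not real results.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Measurement:
    run_date: date
    dimension: str  # e.g. "completeness"
    score: float    # percentage, 0-100


def below_target(history: List[Measurement], target: float) -> List[Measurement]:
    """Runs whose score fell short of the target."""
    return [m for m in history if m.score < target]


def is_improving(history: List[Measurement]) -> bool:
    """True if the latest run scored higher than the earliest one."""
    ordered = sorted(history, key=lambda m: m.run_date)
    return len(ordered) >= 2 and ordered[-1].score > ordered[0].score


history = [
    Measurement(date(2022, 1, 1), "completeness", 82.0),
    Measurement(date(2022, 2, 1), "completeness", 88.5),
    Measurement(date(2022, 3, 1), "completeness", 93.0),
]
print([m.run_date.isoformat() for m in below_target(history, target=90.0)])
print(is_improving(history))
```

Keeping the history, rather than only the latest score, is what lets you show iterative improvement over months.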

There are plenty of off-the-shelf products, often costing substantial amounts, such as those from Informatica, Ataccama, and many others. Alternatively, you can build a solution that suits your organisation. In a later post, we’ll explore one option for building a solution.

Takeaway

Regardless of which approach you take to monitoring data quality, build or buy, I hope this post has given you a deeper understanding of what data quality is and why monitoring and improving it is important.

This post was originally published on UstDoes.Tech

Author

Ust Oldfield